Streamlining Data Pipelines: A Guide to ETL and ELT

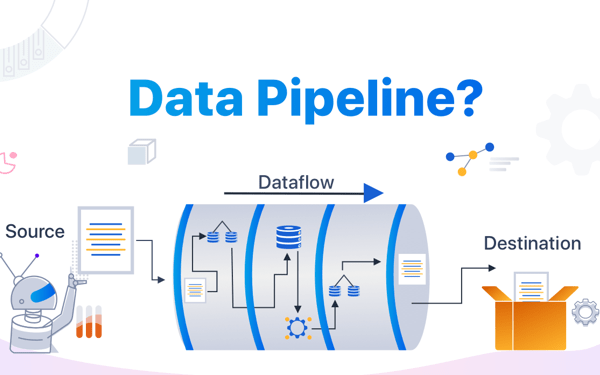

Data pipelines are integral to managing data flow, which spans ingestion, storage, processing, analysis, and visualization. During ingestion, data is collected from diverse sources, either in real time (streaming) or in batches. The ingested data is then stored in a data warehouse or data lake, using technologies such as Hadoop and Amazon S3. Processing cleans and transforms the data with tools like Apache Spark, analysis draws on SQL, Python, or R, and visualization tools such as Tableau communicate the resulting insights.

This article examines the ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) approaches, along with the factors that decide which one makes a pipeline more efficient: data volume, when the transformation happens, and the available infrastructure.
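The central difference between the two approaches is when the transformation runs. The following is a minimal sketch that makes this concrete, using Python's built-in `sqlite3` module as a stand-in for a data warehouse. The sample records, the table names (`orders_etl`, `orders_raw`, `orders_elt`), and the functions `extract`, `etl`, and `elt` are hypothetical and chosen only for illustration. The sketch is not a production pipeline or any particular tool's method.

```python
import sqlite3

# Hypothetical raw records standing in for data extracted from a source system.
RAW_ORDERS = [
    {"id": 1, "customer": " alice ", "amount": "20.00"},
    {"id": 2, "customer": "BOB", "amount": "5.50"},
    {"id": 3, "customer": "carol", "amount": None},  # incomplete record
]


def extract():
    """Extract step: return copies of the (hypothetical) source records."""
    return [dict(row) for row in RAW_ORDERS]


def etl(conn):
    """ETL: transform in application code first, then load only cleaned rows."""
    cleaned = [
        (row["id"], row["customer"].strip().lower(), float(row["amount"]))
        for row in extract()
        if row["amount"] is not None  # drop incomplete records before loading
    ]
    conn.execute("CREATE TABLE orders_etl (id INTEGER, customer TEXT, amount REAL)")
    conn.executemany("INSERT INTO orders_etl VALUES (?, ?, ?)", cleaned)


def elt(conn):
    """ELT: load raw rows as-is, then transform inside the database with SQL."""
    conn.execute("CREATE TABLE orders_raw (id INTEGER, customer TEXT, amount TEXT)")
    # Named placeholders let sqlite3 read values directly from each dict.
    conn.executemany(
        "INSERT INTO orders_raw VALUES (:id, :customer, :amount)", extract()
    )
    # The transformation runs in the target store, after loading.
    conn.execute(
        """
        CREATE TABLE orders_elt AS
        SELECT id,
               LOWER(TRIM(customer)) AS customer,
               CAST(amount AS REAL) AS amount
        FROM orders_raw
        WHERE amount IS NOT NULL
        """
    )


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    etl(conn)
    elt(conn)
    for table in ("orders_etl", "orders_elt"):
        rows = conn.execute(f"SELECT * FROM {table} ORDER BY id").fetchall()
        print(table, rows)
```

Both paths produce the same cleaned rows. What differs is where the work happens. ETL keeps incomplete raw data out of the target entirely. ELT keeps the untouched `orders_raw` table available, so transformations can be rerun or revised later using the target system's own compute.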