Businesses increasingly need to act on data within seconds of its creation rather than hours or days later. Real-time data processing with streaming technologies makes this possible, allowing organizations to respond to events as they happen. In this post, we’ll explore what real-time data processing is, the role of streaming technologies, their advantages, and their practical applications.

What is Real-Time Data Processing?

Real-time data processing is the ability to ingest, analyze, and act upon data as it is generated, rather than waiting for it to be stored and processed later. Unlike traditional batch processing, which operates on large data sets at scheduled intervals, real-time processing handles each event shortly after it arrives, typically within milliseconds to seconds.

For example, think of financial systems detecting fraudulent transactions or ride-hailing apps updating driver locations in real time. These systems rely on streaming technologies to process massive data flows with minimal delay.
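To make the contrast with batch processing concrete, here is a minimal sketch in plain Python, with no streaming library involved. The function names `batch_mean` and `streaming_mean` and the sample readings are illustrative assumptions, not part of any particular platform.

```python
from typing import Iterable, Iterator, List


def batch_mean(readings: List[float]) -> float:
    """Batch style: wait until all data is collected, then compute once."""
    return sum(readings) / len(readings)


def streaming_mean(readings: Iterable[float]) -> Iterator[float]:
    """Streaming style: emit an updated mean after every incoming reading."""
    count = 0
    mean = 0.0
    for value in readings:
        count += 1
        # Incremental update: earlier readings do not need to stay in memory.
        mean += (value - mean) / count
        yield mean


readings = [10.0, 20.0, 30.0, 40.0]  # hypothetical sensor values
print(batch_mean(readings))            # one answer, after all data has arrived
print(list(streaming_mean(readings)))  # an updated answer after each event
```

The batch version produces a single result once the data set is complete, while the streaming version has a usable answer after every event.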

The Core of Real-Time Data Processing

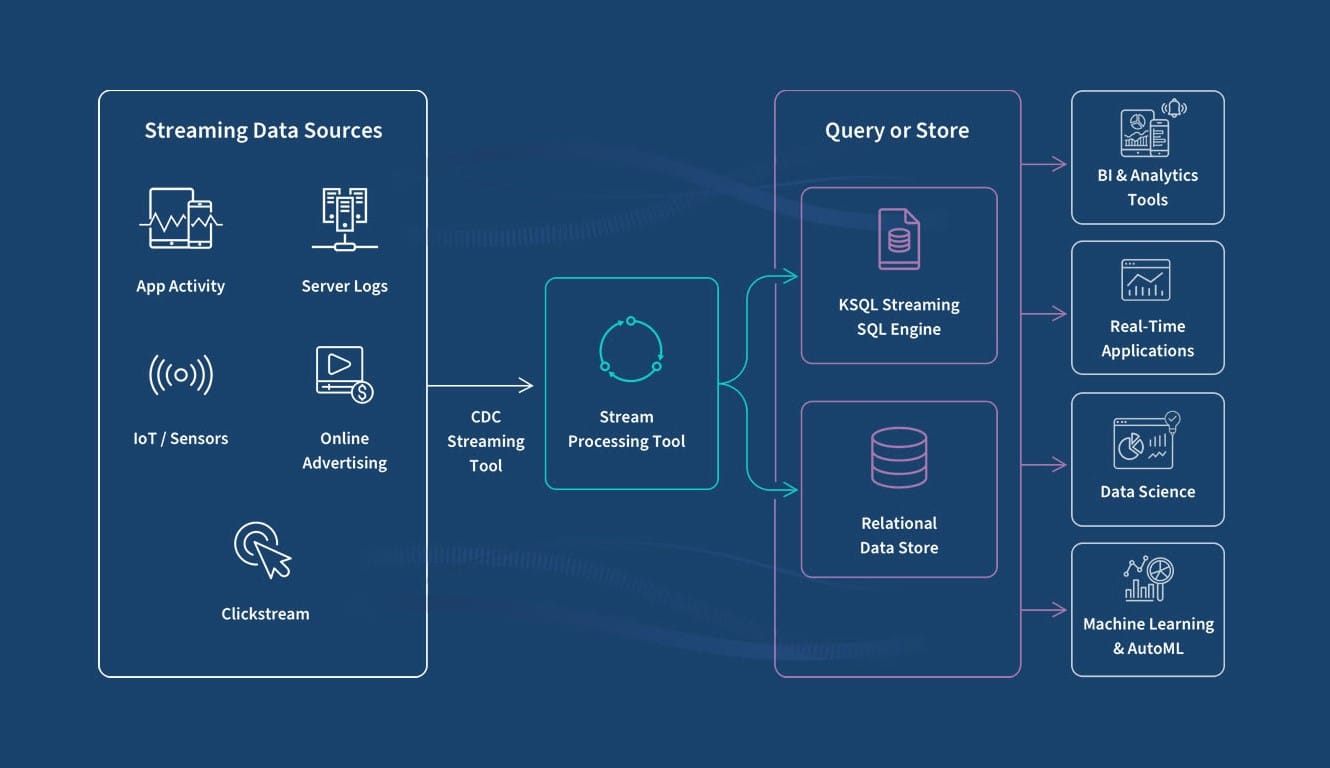

Streaming technologies are at the heart of real-time data processing. These platforms allow data to flow continuously from sources like IoT sensors, social media feeds, and application logs to analytics and decision-making systems.

Some leading streaming technologies include:

Apache Kafka: A distributed event-streaming platform known for its scalability and durability.

Apache Flink: A framework for stateful computations over data streams.

Amazon Kinesis: A cloud-based service designed for real-time data ingestion and analysis.

Google Cloud Dataflow: A fully managed service for batch and streaming data processing.

Apache Storm: A distributed system for processing streams of data in real time.
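One idea behind event-streaming platforms such as Kafka is an append-only log that each consumer reads at its own pace by tracking an offset. The sketch below is an in-memory toy that illustrates the concept only. It is not Kafka's API, and `EventLog`, `append`, and `read_from` are hypothetical names chosen for this example.

```python
from typing import Any, Dict, List, Tuple


class EventLog:
    """In-memory, append-only log; records are never modified or removed."""

    def __init__(self) -> None:
        self._records: List[Any] = []

    def append(self, record: Any) -> int:
        """Store a record and return its offset (its position in the log)."""
        self._records.append(record)
        return len(self._records) - 1

    def read_from(self, offset: int, max_records: int = 10) -> List[Tuple[int, Any]]:
        """Return up to max_records (offset, record) pairs starting at offset."""
        chunk = self._records[offset:offset + max_records]
        return list(enumerate(chunk, start=offset))


log = EventLog()
for event in ["login", "click", "purchase"]:
    log.append(event)

# Each consumer keeps its own offset, so readers do not interfere with each other.
offsets: Dict[str, int] = {"analytics": 0, "alerts": 2}
for name, offset in list(offsets.items()):
    for position, record in log.read_from(offset):
        print(name, position, record)
        offsets[name] = position + 1  # record progress after processing
```

Because reading does not remove records, a new consumer can start from offset 0 and replay the full history. A real platform adds persistence, replication, and partitioning on top of this idea.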

Advantages of Real-Time Data Processing

Adoption of streaming technologies is growing quickly because of the advantages they offer.

Here’s a look at some of their key benefits:

Instant Decision-Making: Businesses can respond to critical events as they happen, whether it's mitigating a security threat or seizing a fleeting market opportunity.

Enhanced Customer Experience: Real-time data processing enables personalized recommendations, faster responses, and improved customer satisfaction.

Operational Efficiency: Organizations can monitor and optimize processes continuously, reducing downtime and inefficiencies.

Scalability: Modern streaming technologies are designed to handle high-throughput data streams, making them ideal for businesses of any size.

Competitive Edge: By leveraging real-time insights, companies can stay ahead of competitors in fast-changing markets.

Key Technologies for Real-Time Data Processing

To effectively process real-time data streams, organizations rely on a variety of technologies:

Message Brokers:

Apache Kafka: A distributed streaming platform that handles high-volume, high-velocity data streams.

RabbitMQ: A flexible message broker that supports various messaging patterns, including publish-subscribe and point-to-point.

Stream Processing Engines:

Apache Flink: A framework for stateful processing of both streaming and batch data.

Apache Spark Streaming: A component of the Apache Spark ecosystem that enables real-time stream processing.

Kafka Streams: A library built on top of Apache Kafka for building real-time data streaming applications.

Data Storage Systems:

Time Series Databases: Specialized databases designed to efficiently store and query time-series data.

NoSQL Databases: Flexible databases that can handle large volumes of unstructured or semi-structured data.
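The two messaging patterns mentioned for message brokers, point-to-point and publish-subscribe, differ in who receives each message. The following sketch models both in memory. It does not use RabbitMQ or any broker client, and the class names `PointToPointQueue` and `PubSubTopic` are assumptions made for illustration.

```python
from typing import Callable, Dict, List

Handler = Callable[[str], None]


class PointToPointQueue:
    """Each message is delivered to exactly one consumer (round-robin here)."""

    def __init__(self) -> None:
        self._consumers: List[Handler] = []
        self._next = 0

    def register(self, handler: Handler) -> None:
        self._consumers.append(handler)

    def send(self, message: str) -> None:
        if not self._consumers:
            raise RuntimeError("no consumers registered")
        handler = self._consumers[self._next % len(self._consumers)]
        self._next += 1
        handler(message)


class PubSubTopic:
    """Each message is delivered to every subscriber."""

    def __init__(self) -> None:
        self._subscribers: List[Handler] = []

    def subscribe(self, handler: Handler) -> None:
        self._subscribers.append(handler)

    def publish(self, message: str) -> None:
        for handler in self._subscribers:
            handler(message)


received: Dict[str, List[str]] = {
    "worker_a": [], "worker_b": [], "audit": [], "billing": [],
}

queue = PointToPointQueue()
queue.register(received["worker_a"].append)
queue.register(received["worker_b"].append)

topic = PubSubTopic()
topic.subscribe(received["audit"].append)
topic.subscribe(received["billing"].append)

for order in ["order-1", "order-2", "order-3"]:
    queue.send(order)     # work is split between the two workers
    topic.publish(order)  # both subscribers see every order

print(received)
```

Point-to-point suits work distribution, where each task should be handled once. Publish-subscribe suits fan-out, where several independent systems each need the full stream.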

Applications of Real-Time Data Processing

Real-time data processing is being adopted across diverse industries.

Here are some notable examples:

Financial Services: Banks and fintech companies use real-time systems to detect fraud, analyze transactions, and execute high-frequency trades.

Healthcare: Streaming technologies power real-time patient monitoring systems, enabling doctors to make timely decisions in critical situations.

E-commerce: Online retailers leverage real-time analytics for inventory management, dynamic pricing, and personalized product recommendations.

Transportation and Logistics: Ride-sharing apps and logistics firms optimize routes, track shipments, and manage fleet operations in real time.

Telecommunications: Network providers use real-time data to monitor service quality and address outages proactively.
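As a rough illustration of the fraud-detection use case, the sketch below applies a simple sliding-window "velocity" rule: flag a card that makes more than a set number of transactions within a time window. The rule, the `VelocityRule` class, the thresholds, and the sample transactions are all assumptions for this example. Production fraud systems are considerably more sophisticated, and the sketch also assumes timestamps arrive in order for each card.

```python
from collections import defaultdict, deque
from typing import Deque, Dict


class VelocityRule:
    """Flags a card when it exceeds max_events within window_seconds."""

    def __init__(self, max_events: int, window_seconds: float) -> None:
        self.max_events = max_events
        self.window_seconds = window_seconds
        self._recent: Dict[str, Deque[float]] = defaultdict(deque)

    def observe(self, card_id: str, timestamp: float) -> bool:
        """Record one transaction; return True if the card looks suspicious."""
        window = self._recent[card_id]
        window.append(timestamp)
        # Evict timestamps that have fallen out of the sliding window.
        while window and window[0] <= timestamp - self.window_seconds:
            window.popleft()
        return len(window) > self.max_events


rule = VelocityRule(max_events=3, window_seconds=60.0)
# (card_id, seconds since start) pairs; the values are made up for illustration.
transactions = [
    ("card-1", 0), ("card-1", 10), ("card-2", 15),
    ("card-1", 20), ("card-1", 25), ("card-1", 200),
]
flags = [rule.observe(card, ts) for card, ts in transactions]
print(flags)  # only the fourth card-1 transaction within 60 seconds is flagged
```

The decision is made as each transaction arrives, using only a small amount of per-card state, which is what lets this kind of check run inline with the payment flow.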

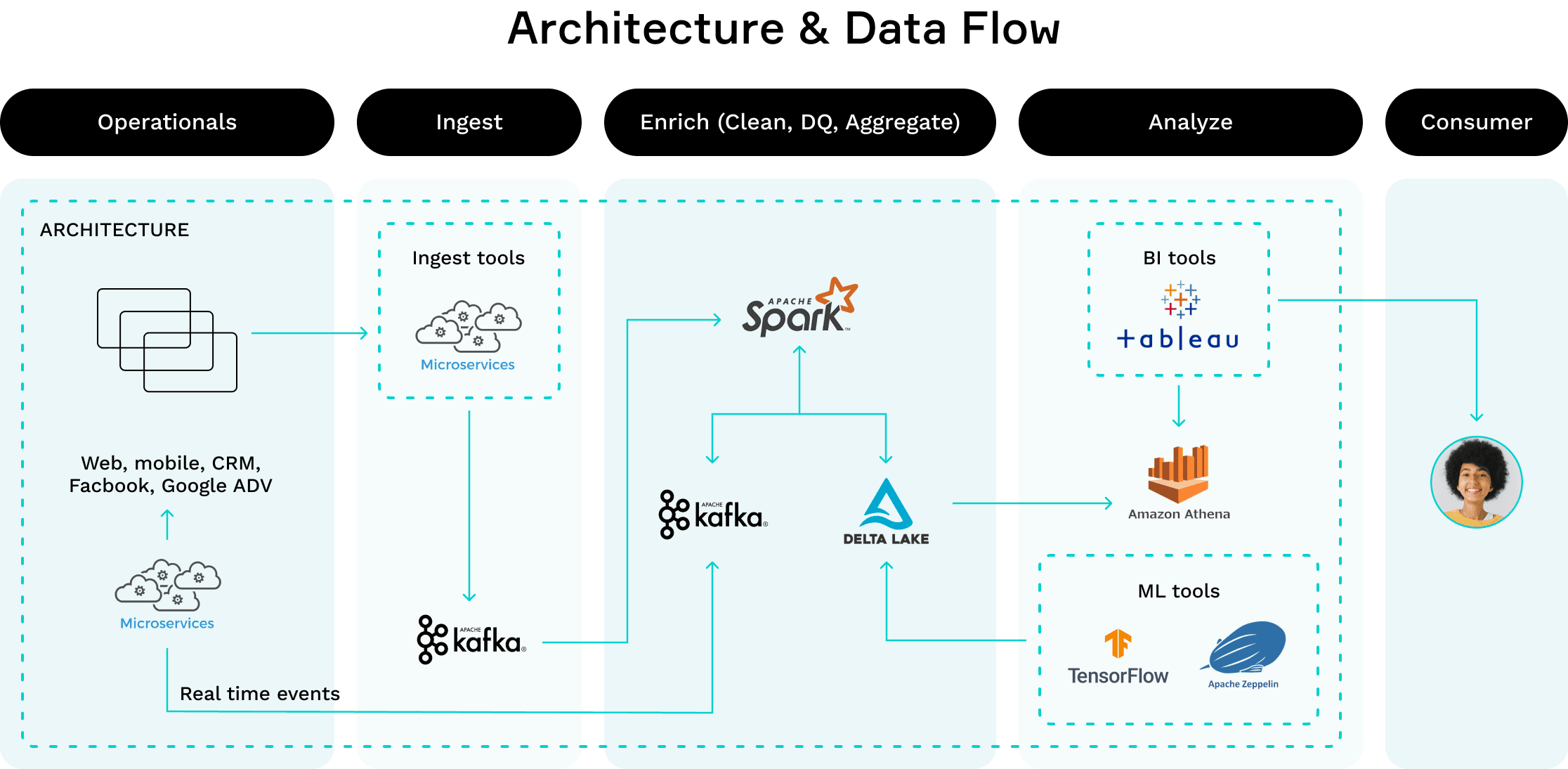

Building a Real-Time Data Pipeline

A typical real-time data pipeline involves the following steps:

Data Ingestion:

Data Sources: Identify and connect to various data sources, such as IoT devices, web servers, and social media platforms.

Data Capture: Use tools like Kafka Connect or custom connectors to capture data streams and feed them into the message broker.

Data Processing:

Data Transformation: Apply transformations like filtering, aggregation, and joining to extract relevant information.

Data Enrichment: Combine data from multiple sources to create a richer data context.

Data Analysis: Perform real-time analytics using techniques like time series analysis, anomaly detection, and machine learning.

Data Storage:

Persisting Data: Store processed data in databases or data warehouses for further analysis and reporting.

Archiving Data: Archive historical data for long-term storage and compliance purposes.

Data Visualization and Alerting:

Real-Time Dashboards: Create interactive dashboards to visualize key metrics and trends.

Alerting Systems: Set up alerts to notify stakeholders of critical events or anomalies.
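The steps above can be sketched end to end with Python generators. This is a simplified illustration, not a production design: it reads a finite list instead of a message broker and reports windows at the end, whereas a real stream processing engine would emit each window as it closes. The device names, field names, window size, and threshold are all hypothetical.

```python
import json
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple

# Hypothetical reference data used for enrichment.
DEVICE_SITES = {"sensor-1": "warehouse", "sensor-2": "loading-dock"}


def ingest(raw_lines: Iterable[str]) -> Iterator[dict]:
    """Ingestion: parse raw JSON messages, skipping malformed ones."""
    for line in raw_lines:
        try:
            event = json.loads(line)
        except json.JSONDecodeError:
            continue
        if isinstance(event, dict):
            yield event


def transform(events: Iterable[dict]) -> Iterator[dict]:
    """Transformation: keep only temperature readings."""
    return (e for e in events if e.get("metric") == "temperature")


def enrich(events: Iterable[dict]) -> Iterator[dict]:
    """Enrichment: attach the site each device belongs to."""
    for e in events:
        yield {**e, "site": DEVICE_SITES.get(e["device"], "unknown")}


def window_averages(events: Iterable[dict], size: int) -> Dict[Tuple[str, int], float]:
    """Analysis: average value per (site, tumbling window of `size` seconds)."""
    totals: Dict[Tuple[str, int], List[float]] = defaultdict(lambda: [0.0, 0])
    for e in events:
        key = (e["site"], e["ts"] // size * size)  # window start time
        totals[key][0] += e["value"]
        totals[key][1] += 1
    return {key: total / count for key, (total, count) in totals.items()}


def alerts(averages: Dict[Tuple[str, int], float], threshold: float) -> List[str]:
    """Alerting: report windows whose average exceeds the threshold."""
    return [
        f"{site} window {start}: avg {avg:.1f}"
        for (site, start), avg in sorted(averages.items())
        if avg > threshold
    ]


raw = [
    '{"device": "sensor-1", "metric": "temperature", "value": 20.0, "ts": 0}',
    '{"device": "sensor-1", "metric": "temperature", "value": 30.0, "ts": 5}',
    'not json',
    '{"device": "sensor-2", "metric": "humidity", "value": 55.0, "ts": 6}',
    '{"device": "sensor-1", "metric": "temperature", "value": 90.0, "ts": 12}',
]
averages = window_averages(enrich(transform(ingest(raw))), size=10)
print(alerts(averages, threshold=50.0))
```

Each stage is a small function that consumes one stream and produces another, so stages can be tested in isolation and swapped out, for example replacing the list with a broker consumer or the printed alert with a notification service.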

Challenges in Real-Time Data Processing

While the benefits are compelling, implementing real-time data processing comes with its share of challenges:

Data Volume and Velocity: Handling high-speed data streams requires robust infrastructure and efficient algorithms.

Latency: Minimizing delays in data processing is critical to achieving true real-time performance.

Data Accuracy: Ensuring data quality and consistency in a streaming environment is complex.

Integration with Legacy Systems: Integrating modern streaming technologies with existing systems can be a technical hurdle.

Cost: Building and maintaining a real-time data pipeline can be resource-intensive.
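One common source of accuracy problems is duplicate delivery: when a producer or broker retries after a failure, the same event can arrive more than once. A minimal sketch of one mitigation, deduplicating by event ID with bounded memory, is shown below. The `deduplicate` function, the event shape, and the ID-tracking limit are assumptions for illustration, and this approach only catches duplicates that arrive while the ID is still being tracked.

```python
from collections import OrderedDict
from typing import Iterable, Iterator, Tuple

Event = Tuple[str, float]  # (event_id, amount)


def deduplicate(events: Iterable[Event], max_tracked_ids: int = 1000) -> Iterator[Event]:
    """Drop events whose ID was seen recently, using bounded memory."""
    seen: "OrderedDict[str, None]" = OrderedDict()
    for event_id, amount in events:
        if event_id in seen:
            continue  # duplicate delivery, skip it
        seen[event_id] = None
        if len(seen) > max_tracked_ids:
            seen.popitem(last=False)  # forget the oldest tracked ID
        yield event_id, amount


incoming = [("tx-1", 10.0), ("tx-2", 5.0), ("tx-1", 10.0), ("tx-3", 7.5), ("tx-2", 5.0)]
unique = list(deduplicate(incoming))
print(unique)
print(sum(amount for _, amount in unique))  # total without double counting
```

Without the deduplication step, the repeated `tx-1` and `tx-2` events would inflate the total. The trade-off is memory: the larger the set of tracked IDs, the longer the gap between duplicates that can be detected.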

Best Practices for Implementing Streaming Technologies

To harness the full potential of real-time data processing, follow these best practices:

Understand Your Data Sources: Identify where your data is coming from and ensure compatibility with streaming platforms.

Choose the Right Technology: Select a streaming technology that aligns with your scalability, latency, and processing needs.

Focus on Scalability: Design your architecture to handle increasing data volumes without compromising performance.

Ensure Data Security: Implement robust encryption and access control measures to safeguard sensitive information.

Leverage Cloud Services: Utilize cloud-based streaming services to reduce infrastructure costs and improve reliability.
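One widely used way to design for scalability is key-based partitioning: events are routed to a partition by hashing a key, so that work can be spread across machines while events for the same key stay together and in order. The sketch below shows the idea under simple assumptions. The `partition_for` function and the SHA-256-based hash are choices made for this example, not the hash that any specific platform uses.

```python
import hashlib
from collections import defaultdict
from typing import Dict, List


def partition_for(key: str, num_partitions: int) -> int:
    """Map a key to a partition using a stable hash.

    Python's built-in hash() is randomized per process for strings, so a
    hashlib digest is used to get the same answer on every machine.
    """
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_partitions


events = [
    ("user-1", "login"), ("user-2", "login"), ("user-1", "click"),
    ("user-3", "login"), ("user-1", "logout"),
]
partitions: Dict[int, List[str]] = defaultdict(list)
for user_id, action in events:
    partitions[partition_for(user_id, num_partitions=4)].append(f"{user_id}:{action}")

for partition_id in sorted(partitions):
    print(partition_id, partitions[partition_id])
```

All events for a given user land in the same partition, so a single worker sees them in order. One caveat worth planning for: with this simple modulo scheme, changing the number of partitions changes where most keys land.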

The Future of Real-Time Data Processing

As businesses continue to embrace digital transformation, the importance of real-time data processing will only grow. Emerging trends such as edge computing, AI-driven analytics, and 5G connectivity are poised to make real-time processing even more accessible and powerful.

Imagine a world where self-driving cars communicate with traffic systems in real time, or where smart factories optimize production on the fly. These possibilities highlight the immense potential of streaming technologies to revolutionize industries.

Conclusion

Real-time data processing with streaming technologies is no longer a luxury; it’s a necessity in the fast-paced digital age. From improving customer experiences to enabling instant decision-making, the advantages are clear. By understanding the tools and best practices, businesses can unlock the full potential of their data streams and stay ahead in an increasingly competitive landscape.

Dot Labs is a leading IT outsourcing firm renowned for its comprehensive services, including cutting-edge software development, meticulous quality assurance, and insightful data analytics. Our team of skilled professionals delivers exceptional nearshoring solutions to companies worldwide, ensuring significant cost savings while maintaining seamless communication and collaboration. Discover the Dot Labs advantage today!

Visit our website, www.dotlabs.ai, for more information on how Dot Labs can help your business with its IT outsourcing needs.
