In today’s fast-evolving digital landscape, businesses are adopting multi-cloud and hybrid computing strategies to maximize flexibility, scalability, and efficiency. This shift presents both opportunities and challenges, requiring new approaches to designing, managing, and optimizing data pipelines across diverse platforms. This blog explores how data engineering is adapting to multi-cloud and hybrid computing, offering actionable insights and best practices for navigating this complex ecosystem.

Understanding Multi-Cloud and Hybrid Computing

Before diving into the specifics of data engineering, it's crucial to grasp the fundamental concepts of multi-cloud and hybrid computing.

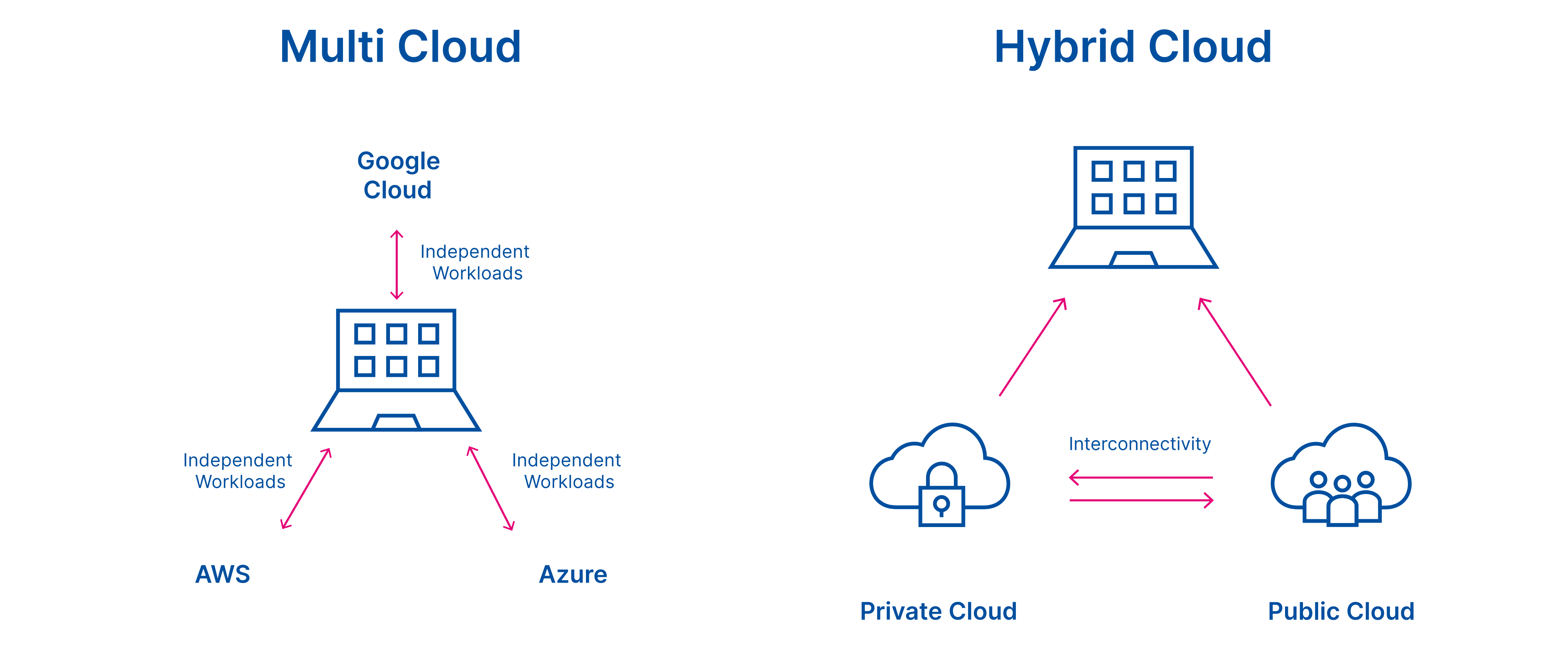

Multi-Cloud Computing

Multi-cloud computing refers to the strategic utilization of multiple cloud service providers within a single organization. This approach offers several advantages, including:

Vendor Lock-In Mitigation: By diversifying across multiple providers, organizations can reduce reliance on any single vendor, mitigating the risk of vendor lock-in.

Optimal Resource Allocation: Different cloud providers offer specialized services and strengths. By selecting the best provider for specific workloads, organizations can optimize resource allocation and cost-efficiency.

Enhanced Disaster Recovery: Distributing workloads across multiple cloud providers can improve disaster recovery capabilities, because an outage at one provider is less likely to affect the entire infrastructure.

Hybrid Computing

Hybrid computing combines the best of both worlds: on-premises infrastructure and cloud-based services. This approach allows organizations to maintain control over sensitive data while leveraging the scalability and flexibility of the cloud. Key benefits of hybrid computing include:

Data Sovereignty: Organizations can keep sensitive data within their own data centers, helping them comply with data privacy and data residency regulations.

Legacy System Integration: Hybrid environments facilitate the seamless integration of legacy systems with modern cloud-based applications.

Gradual Migration: Hybrid computing enables organizations to migrate to the cloud at their own pace, reducing the risks associated with large-scale migrations.

The Role of Data Engineering in Multi-Cloud and Hybrid Environments

Data engineering involves designing, building, and maintaining systems for collecting, storing, and analyzing data. In multi-cloud and hybrid environments, this role becomes more dynamic, as engineers must manage:

Distributed Data Pipelines: Ensuring seamless data flow across varied environments.

Interoperability: Enabling tools and platforms to work together despite differing ecosystems.

Scalability: Adapting systems to handle fluctuating workloads efficiently.

Security and Compliance: Safeguarding data while adhering to regional and industry-specific regulations.

Data Engineering Challenges in Multi-Cloud and Hybrid Environments

While multi-cloud and hybrid computing offer numerous advantages, they also introduce unique challenges for data engineers:

Data Consistency and Synchronization: Keeping data consistent across multiple clouds and on-premises systems is difficult, especially when data is replicated or synchronized between them.

Data Security and Compliance: Ensuring data security and regulatory compliance becomes more challenging in multi-cloud and hybrid environments, as data may reside in many locations and be accessed by many different users.

Data Integration and ETL: Integrating data from diverse sources, including on-premises systems and multiple cloud providers, requires robust data integration and ETL (Extract, Transform, Load) processes.

Data Governance and Metadata Management: Establishing and maintaining data governance policies and managing metadata across multiple environments can be complex.

Performance Optimization: Optimizing data pipelines and queries in multi-cloud and hybrid environments can be challenging due to varying network latencies and resource constraints.
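For the consistency challenge, one common safeguard is to reconcile copies of a dataset by comparing row counts and an order-independent checksum. The sketch below assumes each copy can be read as an iterable of tuples with identically typed values; the function names and sample rows are hypothetical, and in practice the rows would come from queries against each environment.

```python
import hashlib
from typing import Iterable, Tuple

Row = Tuple[object, ...]

def table_fingerprint(rows: Iterable[Row]) -> Tuple[int, str]:
    """Return (row_count, checksum); the checksum does not depend on row order."""
    count = 0
    total = 0
    for row in rows:
        # Hash a canonical text form of the row, then add the hashes modulo 2**64.
        # Addition is order-independent, and unlike XOR it does not let
        # duplicate rows cancel each other out.
        h = hashlib.sha256(repr(row).encode("utf-8")).digest()
        total = (total + int.from_bytes(h[:8], "big")) % (1 << 64)
        count += 1
    return count, f"{total:016x}"

def replicas_match(source: Iterable[Row], replica: Iterable[Row]) -> bool:
    """True if both copies have the same row count and checksum."""
    return table_fingerprint(source) == table_fingerprint(replica)

on_prem = [(1, "alice", 120.0), (2, "bob", 75.5)]
cloud_copy = [(2, "bob", 75.5), (1, "alice", 120.0)]   # same rows, different order
stale_copy = [(1, "alice", 120.0)]                      # missing a row

print(replicas_match(on_prem, cloud_copy))  # True
print(replicas_match(on_prem, stale_copy))  # False
```

Because the checksum is built from `repr`, both sides must normalize types first (for example, a decimal in one store and a float in another would not match).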

Best Practices for Data Engineering in Multi-Cloud and Hybrid Systems

To address these challenges and effectively leverage the benefits of multi-cloud and hybrid computing, data engineers should adopt the following best practices:

Adopt a Unified Data Architecture

A unified architecture ensures consistency in data handling and reduces silos. Architectural approaches such as data fabric and data mesh provide frameworks for integrating and governing data across platforms.
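At its simplest, a unified architecture gives every dataset one logical name that resolves to wherever the data physically lives, together with an accountable owner. The registry below is a hypothetical, in-memory illustration of that idea, not the API of any data fabric or data mesh product; the dataset names, owners, and URIs are made up.

```python
from dataclasses import dataclass
from typing import Dict, List

@dataclass(frozen=True)
class DatasetEntry:
    name: str       # logical name that every consumer uses
    location: str   # physical URI in a specific cloud or data center
    owner: str      # team accountable for quality and access policies

class DataCatalog:
    """Minimal in-memory registry mapping logical dataset names to physical locations."""

    def __init__(self) -> None:
        self._entries: Dict[str, DatasetEntry] = {}

    def register(self, entry: DatasetEntry) -> None:
        if entry.name in self._entries:
            raise ValueError(f"dataset already registered: {entry.name}")
        self._entries[entry.name] = entry

    def resolve(self, name: str) -> str:
        """Return the physical location behind a logical dataset name."""
        if name not in self._entries:
            raise KeyError(f"unknown dataset: {name}")
        return self._entries[name].location

    def owned_by(self, owner: str) -> List[str]:
        """List the logical names of datasets a team is responsible for."""
        return sorted(e.name for e in self._entries.values() if e.owner == owner)

catalog = DataCatalog()
catalog.register(DatasetEntry("sales.orders", "s3://example-bucket/orders/", "sales-eng"))
catalog.register(DatasetEntry("sales.forecasts", "gs://example-bucket/forecasts/", "sales-eng"))
catalog.register(DatasetEntry("hr.employees", "postgresql://onprem-db.internal/hr", "hr-data"))

print(catalog.resolve("sales.orders"))   # s3://example-bucket/orders/
print(catalog.owned_by("sales-eng"))     # ['sales.forecasts', 'sales.orders']
```

Consumers depend only on the logical name, so a dataset can move between providers by updating one catalog entry.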

Implement Data Virtualization

Data virtualization provides a single access layer over distributed data without physically moving or copying it, reducing data duplication and pipeline complexity.
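A virtualization layer exposes one query interface over several sources and fetches only what each query needs. The sketch below assumes every source can be wrapped in a function that accepts a filter and returns matching rows, so the filter is "pushed down" to the source; the adapter names and rows are hypothetical stand-ins for, say, an on-premises database and a cloud warehouse.

```python
from typing import Callable, Dict, Iterable, Iterator, List

Row = Dict[str, object]
Predicate = Callable[[Row], bool]
# A source adapter takes a predicate and yields only the matching rows,
# standing in for a query that would execute inside the remote system.
Source = Callable[[Predicate], Iterable[Row]]

def make_source(rows: List[Row], origin: str) -> Source:
    """Wrap a (hypothetical) backing store as a source adapter."""
    def query(pred: Predicate) -> Iterator[Row]:
        for row in rows:
            if pred(row):
                # Return matching rows tagged with their origin; the rest never leave the source.
                yield {**row, "_origin": origin}
    return query

class VirtualView:
    """Presents several sources as one logical table that is queried on demand."""

    def __init__(self, sources: List[Source]) -> None:
        self._sources = sources

    def select(self, pred: Predicate) -> Iterator[Row]:
        # Nothing is materialized up front; each source is queried lazily
        # with the same predicate as results are consumed.
        for source in self._sources:
            yield from source(pred)

on_prem = make_source([{"id": 1, "region": "EU"}, {"id": 2, "region": "US"}], "on_prem")
cloud = make_source([{"id": 3, "region": "EU"}], "cloud")
customers = VirtualView([on_prem, cloud])

eu_ids = [row["id"] for row in customers.select(lambda r: r["region"] == "EU")]
print(eu_ids)  # [1, 3]
```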

Leverage Cloud-Native Tools

Major cloud providers offer native data processing and integration services, such as AWS Glue, Azure Data Factory, and Google Cloud Dataflow. Using these services can simplify pipeline design and improve integration with the rest of each provider’s platform.

Automate Wherever Possible

Automating repetitive tasks such as scheduling, testing, and deployment makes pipelines more reliable and frees engineers for higher-value work.
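Much of this automation comes down to running tasks in dependency order and retrying transient failures, which is what workflow orchestrators do at scale. The toy runner below illustrates that idea with the standard library's `graphlib` (Python 3.9+); the task names, the simulated failure, and the retry policy are hypothetical, and it is not a substitute for a production orchestrator.

```python
from graphlib import TopologicalSorter  # Python 3.9+
from typing import Callable, Dict, List, Set

def run_pipeline(tasks: Dict[str, Callable[[], None]],
                 depends_on: Dict[str, Set[str]],
                 max_retries: int = 2) -> List[str]:
    """Run tasks in dependency order, retrying a failing task up to max_retries times."""
    graph = {name: depends_on.get(name, set()) for name in tasks}
    completed: List[str] = []
    for name in TopologicalSorter(graph).static_order():
        for attempt in range(max_retries + 1):
            try:
                tasks[name]()
                break
            except Exception:
                if attempt == max_retries:
                    raise  # out of retries: stop the run and surface the error
        completed.append(name)
    return completed

attempts = {"transform": 0}

def flaky_transform() -> None:
    # Simulates a transient failure (e.g. a dropped cross-cloud connection) on the first try.
    attempts["transform"] += 1
    if attempts["transform"] == 1:
        raise ConnectionError("transient network error")

tasks = {
    "extract_onprem": lambda: None,
    "extract_cloud": lambda: None,
    "transform": flaky_transform,
    "load": lambda: None,
}
deps = {"transform": {"extract_onprem", "extract_cloud"}, "load": {"transform"}}

order = run_pipeline(tasks, deps)
print(order[-2:], attempts["transform"])  # ['transform', 'load'] 2
```

The two extract tasks have no dependency on each other, so their relative order is unspecified; only the transform-then-load sequence is guaranteed.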

Prioritize Security and Compliance

Use encryption, identity management, and region-specific configurations to protect data and meet regulatory requirements.
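Region-specific configurations are easiest to enforce as an automated check that runs before any write. The guard below is a hypothetical sketch: the classification labels and the allowed-region policy are illustrative assumptions (the region names are real AWS and Google Cloud identifiers), and actual rules would come from your legal and compliance requirements.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet

# Hypothetical policy: which storage regions each data classification may use.
RESIDENCY_POLICY: Dict[str, FrozenSet[str]] = {
    "eu_personal": frozenset({"eu-west-1", "europe-west4"}),
    "public": frozenset({"eu-west-1", "europe-west4", "us-east-1"}),
}

@dataclass(frozen=True)
class WriteRequest:
    dataset: str
    classification: str
    target_region: str
    encrypted: bool

def check_write(req: WriteRequest) -> None:
    """Raise PermissionError if a write would violate encryption or residency rules."""
    if not req.encrypted:
        raise PermissionError(f"{req.dataset}: data must be encrypted before writing")
    allowed = RESIDENCY_POLICY.get(req.classification)
    if allowed is None:
        # Unknown classifications are denied by default rather than allowed.
        raise PermissionError(f"{req.dataset}: unknown classification {req.classification!r}")
    if req.target_region not in allowed:
        raise PermissionError(
            f"{req.dataset}: region {req.target_region} not allowed for {req.classification}"
        )

check_write(WriteRequest("customers", "eu_personal", "europe-west4", encrypted=True))  # passes
try:
    check_write(WriteRequest("customers", "eu_personal", "us-east-1", encrypted=True))
except PermissionError as err:
    print(err)  # customers: region us-east-1 not allowed for eu_personal
```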

Monitor and Optimize Costs

Regularly review resource usage to identify inefficiencies such as idle or overprovisioned resources. Multi-cloud cost management platforms such as CloudHealth or Spot.io can help.
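A basic cost review normalizes billing data from every provider into one shape, then totals it and flags underused resources. The sketch below assumes such a normalized record format already exists; the record layout, the utilization threshold, and all figures are made up for illustration and do not reflect any provider's billing export.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

# Hypothetical normalized record: (provider, service, monthly_cost_usd, avg_utilization 0..1).
CostRecord = Tuple[str, str, float, float]

def cost_by_provider(records: Iterable[CostRecord]) -> Dict[str, float]:
    """Total monthly spend per provider."""
    totals: Dict[str, float] = defaultdict(float)
    for provider, _service, cost, _util in records:
        totals[provider] += cost
    return dict(totals)

def underused(records: Iterable[CostRecord], max_util: float = 0.2) -> List[str]:
    """List provider/service pairs whose average utilization is below max_util."""
    return [f"{p}/{s}" for p, s, _cost, util in records if util < max_util]

records = [
    ("aws", "etl-cluster", 1200.0, 0.65),
    ("azure", "analytics-pool", 800.0, 0.10),
    ("gcp", "ml-training", 450.0, 0.15),
]
print(cost_by_provider(records))  # {'aws': 1200.0, 'azure': 800.0, 'gcp': 450.0}
print(underused(records))         # ['azure/analytics-pool', 'gcp/ml-training']
```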

Emerging Trends in Multi-Cloud and Hybrid Data Engineering

Kubernetes and Containerization

Kubernetes has become the de facto standard for orchestrating containerized applications across clouds and on-premises environments. For data engineers, it simplifies the deployment of scalable and portable data processing solutions.
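Portability comes from describing a workload once and running it on any conformant cluster, whether managed by a cloud provider or hosted on-premises. The function below builds a standard Kubernetes `batch/v1` Job manifest as a Python dict; the job name, container image, arguments, and resource requests are hypothetical placeholders.

```python
import json
from typing import Any, Dict, List

def batch_job_manifest(name: str, image: str, args: List[str],
                       cpu: str = "1", memory: str = "2Gi") -> Dict[str, Any]:
    """Build a Kubernetes batch/v1 Job manifest for one containerized processing step."""
    return {
        "apiVersion": "batch/v1",
        "kind": "Job",
        "metadata": {"name": name},
        "spec": {
            "backoffLimit": 2,  # let Kubernetes retry a failed pod up to twice
            "template": {
                "spec": {
                    "restartPolicy": "Never",  # Job pods must use Never or OnFailure
                    "containers": [{
                        "name": name,
                        "image": image,
                        "args": args,
                        "resources": {"requests": {"cpu": cpu, "memory": memory}},
                    }],
                },
            },
        },
    }

manifest = batch_job_manifest(
    "daily-transform",
    "registry.example.com/etl/transform:1.0",  # hypothetical image
    ["--date", "2024-01-01"],
)
print(json.dumps(manifest, indent=2))
```

Kubernetes accepts JSON as well as YAML manifests, so saving this output to a file and running `kubectl apply -f` against it should work on any conformant cluster.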

Edge Computing

As more data is generated at the edge, hybrid systems increasingly use edge computing to process and analyze data locally before syncing it with central systems.
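Processing locally often means reducing a window of raw readings to a compact summary and forwarding raw values only when they matter. The sketch below assumes numeric sensor readings and a single alert threshold; the device ID, threshold, and readings are hypothetical.

```python
from statistics import mean
from typing import Any, Dict, List

def summarize_window(readings: List[float], device_id: str, threshold: float) -> Dict[str, Any]:
    """Reduce a window of raw sensor readings to a compact summary for upload."""
    if not readings:
        raise ValueError("empty window")
    return {
        "device_id": device_id,
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": round(mean(readings), 2),
        # Keep raw values only for readings that need central investigation.
        "alerts": [r for r in readings if r > threshold],
    }

window = [21.5, 21.7, 22.0, 35.2, 21.9]  # hypothetical temperature readings
summary = summarize_window(window, "sensor-17", threshold=30.0)
print(summary["mean"], summary["alerts"])  # 24.46 [35.2]
```

Only the summary dict needs to be synced to the central system, which cuts bandwidth while keeping the anomalous reading visible.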

AI-Driven Data Engineering

AI tools are automating tasks like anomaly detection in pipelines, schema mapping, and query optimization, making data engineering more efficient.
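AI-driven tools learn far richer patterns, but the basic idea behind pipeline anomaly detection can be shown with a simple statistical baseline: flag a run whose output volume deviates sharply from recent history. The z-score check below is a swapped-in statistical technique, not a machine learning model, and the row counts and threshold are hypothetical.

```python
from statistics import mean, stdev
from typing import List

def is_anomalous(history: List[int], latest: int, z_threshold: float = 3.0) -> bool:
    """Flag `latest` if it lies more than z_threshold standard deviations from the history mean."""
    if len(history) < 2:
        return False  # not enough data to estimate spread
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return latest != mu
    return abs(latest - mu) / sigma > z_threshold

daily_rows = [10_120, 9_980, 10_050, 10_200, 9_900, 10_010, 10_090]
print(is_anomalous(daily_rows, 10_070))  # False
print(is_anomalous(daily_rows, 4_300))   # True
```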

Serverless Data Pipelines

Serverless architectures let engineers build pipelines without managing infrastructure, and their automatic scaling and pay-per-use billing can improve scalability and cost-efficiency, particularly for intermittent or bursty workloads.
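As one concrete shape of a serverless pipeline step, an AWS Lambda function in Python can be triggered whenever a file lands in an S3 bucket. The handler below reads the bucket name and object key from the S3 event notification structure; the processing step is a placeholder, and the bucket and key in the sample event are hypothetical.

```python
from urllib.parse import unquote_plus

def handler(event, context):
    """Process each object referenced in an S3 event notification."""
    processed = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 event notifications.
        key = unquote_plus(record["s3"]["object"]["key"])
        # Placeholder for the real work, e.g. parsing the file and loading it downstream.
        processed.append(f"{bucket}/{key}")
    return {"processed": processed}

sample_event = {
    "Records": [
        {"s3": {"bucket": {"name": "example-raw-data"},
                "object": {"key": "2024/orders+jan.csv"}}}
    ]
}
print(handler(sample_event, None))  # {'processed': ['example-raw-data/2024/orders jan.csv']}
```

The platform invokes one handler per batch of events and scales the number of concurrent invocations with the arrival rate, so the function itself contains no capacity logic.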

Case Study: Multi-Cloud Success Story

A Retail Giant Adopts Multi-Cloud for Resilient Data Pipelines

A global retailer used AWS for operational data, Azure for analytics, and Google Cloud for AI-driven insights. By adopting a multi-cloud strategy:

Data engineers integrated systems using APIs and ETL pipelines.

Cloud-native tools streamlined data preparation and analytics.

A unified data fabric enabled seamless access and governance.

The result? Faster insights, reduced downtime, and enhanced customer satisfaction.

How to Future-Proof Your Data Engineering Strategy

Invest in Skill Development

Encourage continuous learning in areas like Kubernetes, cloud services, and automation.

Adopt Open Standards

Use technologies that support interoperability, such as Apache Kafka for event streaming or Apache Arrow for columnar data interchange.

Build for Flexibility

Design pipelines with modularity in mind, allowing easy updates or reconfiguration as needs evolve.
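One way to get this modularity is to make pipeline logic depend on a small interface rather than on a specific storage service, so a target can be swapped without touching transformations. The sketch below uses a `typing.Protocol` for a hypothetical `Sink` interface with an in-memory test implementation; a production sink would wrap a real cloud or on-premises store.

```python
from typing import Dict, Iterable, List, Protocol

Row = Dict[str, object]

class Sink(Protocol):
    """Anything that can persist a batch of rows: a cloud bucket, a warehouse, a local file."""
    def write(self, rows: List[Row]) -> int: ...

class InMemorySink:
    """Test double; a production sink would wrap a specific storage service."""
    def __init__(self) -> None:
        self.rows: List[Row] = []

    def write(self, rows: List[Row]) -> int:
        self.rows.extend(rows)
        return len(rows)

def clean(rows: Iterable[Row]) -> List[Row]:
    # Transformation step: drop rows with no amount and upper-case currency codes.
    return [{**r, "currency": str(r["currency"]).upper()}
            for r in rows if r.get("amount") is not None]

def run(rows: Iterable[Row], sink: Sink) -> int:
    """The pipeline depends only on the Sink interface, so targets can be swapped."""
    return sink.write(clean(rows))

sink = InMemorySink()
written = run([{"amount": 10, "currency": "usd"}, {"amount": None, "currency": "eur"}], sink)
print(written, sink.rows)  # 1 [{'amount': 10, 'currency': 'USD'}]
```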

Collaborate Across Teams

Encourage cross-functional collaboration between data engineers, analysts, and DevOps teams to align goals and strategies.

Conclusion

Data engineering in the age of multi-cloud and hybrid computing is not just a technical challenge; it’s also a strategic opportunity. By adopting innovative tools, fostering collaboration, and prioritizing flexibility, businesses can unlock the full potential of their data. Whether it’s enabling real-time analytics, improving decision-making, or ensuring compliance, effective data engineering is at the heart of digital transformation.

FAQs

Q: How can small businesses benefit from multi-cloud strategies?

Small businesses can gain flexibility and potential cost savings by choosing, for each workload, the provider that best meets their needs.

Q: What is the future of hybrid computing?

Hybrid computing is expected to keep growing, driven in part by advances in edge computing and 5G, and to remain a critical part of the IT landscape.

Q: Are there risks in multi-cloud strategies?

Yes, including increased complexity, potential security gaps, and cost management challenges. However, proper planning and tools can mitigate these risks.

Dot Labs is a leading IT outsourcing firm renowned for its comprehensive services, including cutting-edge software development, meticulous quality assurance, and insightful data analytics. Our team of skilled professionals delivers exceptional nearshoring solutions to companies worldwide, ensuring significant cost savings while maintaining seamless communication and collaboration. Discover the Dot Labs advantage today!

Visit our website, www.dotlabs.ai, for more information on how Dot Labs can help with your IT outsourcing needs.
