In today's data-driven world, organizations are constantly looking for better ways to handle an ever-increasing volume of data. Two prominent open-source technologies, Apache Kafka and Apache Spark, are widely used to move and process data at scale. However, they serve distinct purposes, and understanding what each one does is essential for making informed decisions about adopting them in data projects.

Purpose and Core Functionality:

Apache Kafka: The Data Pipeline Backbone

Apache Kafka is a distributed event streaming platform designed to serve as the backbone of data pipelines. Its primary purpose is to ingest, store, and distribute streams of records in real time. Kafka excels at high-throughput, low-latency streaming, and it provides fault tolerance, scalability, and durability by splitting each topic into partitions and replicating those partitions across multiple brokers.

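Think of Kafka as a robust message broker: producers publish records to named topics, and consumers subscribe to the topics they are interested in. Unlike a traditional message queue, Kafka does not delete a record once it has been read, so multiple consumers can process the same stream independently. It acts as a reliable intermediary, facilitating real-time data exchange between different parts of your data ecosystem.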
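To make the publish/subscribe model more concrete, the following is a minimal in-memory sketch in Python. It is not the Kafka client API: `ToyTopic`, `ToyConsumer`, and the CRC32-based partitioner are hypothetical stand-ins (Kafka's default partitioner hashes record keys with murmur2). The sketch illustrates three ideas: a topic is split into append-only partitions, records with the same key land in the same partition (which preserves their relative order), and each consumer tracks its own offset, so reading never removes data.

```python
from collections import defaultdict
from zlib import crc32


class ToyTopic:
    """Hypothetical in-memory stand-in for a Kafka topic: a fixed set of append-only partition logs."""

    def __init__(self, num_partitions: int) -> None:
        self.partitions: list[list[tuple[str, str]]] = [[] for _ in range(num_partitions)]

    def publish(self, key: str, value: str) -> tuple[int, int]:
        # Same key -> same partition, so per-key order is preserved.
        # CRC32 is an illustrative choice; Kafka's default partitioner uses murmur2.
        p = crc32(key.encode()) % len(self.partitions)
        self.partitions[p].append((key, value))
        return p, len(self.partitions[p]) - 1  # (partition, offset)


class ToyConsumer:
    """Hypothetical subscriber that remembers its own read position (offset) per partition."""

    def __init__(self, topic: ToyTopic) -> None:
        self.topic = topic
        self.offsets: defaultdict[int, int] = defaultdict(int)

    def poll(self) -> list[tuple[int, int, str, str]]:
        # Return every record past this consumer's offsets; the log itself is left untouched.
        records = []
        for p, log in enumerate(self.topic.partitions):
            for offset in range(self.offsets[p], len(log)):
                key, value = log[offset]
                records.append((p, offset, key, value))
            self.offsets[p] = len(log)
        return records
```

Each `ToyConsumer` here behaves roughly like a consumer in its own consumer group, so two of them (say, billing and analytics) both receive every record. Within a real consumer group, Kafka instead divides a topic's partitions among the group's members.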

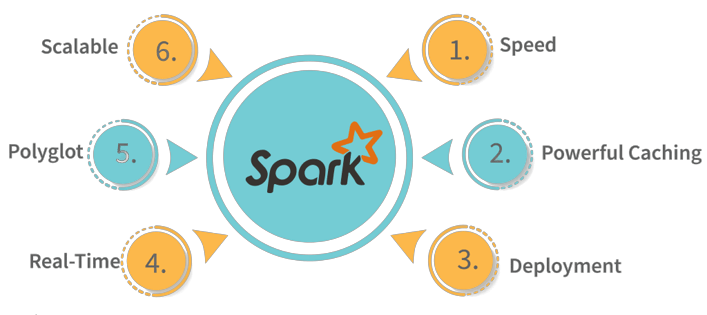

Apache Spark: The Data Processing Powerhouse

Apache Spark, on the other hand, is a versatile distributed data processing engine. While it can handle real-time stream processing, its capabilities extend far beyond that: Spark supports batch processing, stream processing, machine learning (through its MLlib library), and graph processing (through GraphX).

Spark processes data in parallel across a cluster by splitting datasets into partitions and running tasks on many machines at once, which makes it well suited to distributed computing, complex data transformations, and advanced analytics. It integrates with a wide range of data storage systems and offers APIs in Scala, Java, Python, and R, along with libraries such as Spark SQL for different use cases.
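The partition-then-combine idea behind Spark's parallelism can be sketched without a cluster. The example below is plain Python, not Spark: it splits the input into partitions, counts words in each partition on a separate thread (threads stand in for executors and show the structure, not a real speedup), and then merges the partial results. The function names and the round-robin partitioning are illustrative assumptions.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def split_into_partitions(lines: list[str], num_partitions: int) -> list[list[str]]:
    # Hypothetical round-robin split; Spark derives partitions from the data source or a partitioner.
    return [lines[i::num_partitions] for i in range(num_partitions)]


def count_partition(partition: list[str]) -> Counter:
    # "Map side": each worker counts words in its own partition, independently of the others.
    counts: Counter = Counter()
    for line in partition:
        counts.update(line.lower().split())
    return counts


def word_count(lines: list[str], num_partitions: int = 4) -> Counter:
    partitions = split_into_partitions(lines, num_partitions)
    with ThreadPoolExecutor(max_workers=num_partitions) as pool:
        partials = list(pool.map(count_partition, partitions))
    # "Reduce side": merge the per-partition counts into a single result.
    total: Counter = Counter()
    for partial in partials:
        total.update(partial)
    return total
```

In PySpark, a comparable RDD pipeline would look roughly like `sc.textFile(path).flatMap(lambda line: line.split()).map(lambda w: (w, 1)).reduceByKey(lambda a, b: a + b)`, with Spark handling partitioning, scheduling, and the shuffle across machines.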

Data Storage:

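Apache Kafka: Kafka is not a database or a general-purpose long-term storage system. It writes records durably to disk and retains them for a configurable period (seven days by default; retention can also be extended or made unlimited), but it is not designed for querying or analyzing stored data. Its primary focus is the real-time movement of data through pipelines.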
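Kafka's retention is configured per topic, for example with `retention.ms`, where `-1` disables time-based deletion. The sketch below is a simplified, hypothetical model of time-based retention, not Kafka's implementation: it drops individual records older than the retention window, whereas Kafka deletes whole log segments. It also shows that offsets are never reused, so expiring old data only moves the earliest readable offset forward.

```python
from dataclasses import dataclass, field


@dataclass
class ToyRetentionLog:
    """Hypothetical append-only log with time-based retention, loosely modelled on retention.ms."""

    retention_ms: int  # -1 means "keep forever", mirroring Kafka's convention
    records: list[tuple[int, int, str]] = field(default_factory=list)  # (offset, timestamp_ms, value)
    next_offset: int = 0

    def append(self, timestamp_ms: int, value: str) -> int:
        self.records.append((self.next_offset, timestamp_ms, value))
        self.next_offset += 1
        return self.next_offset - 1

    def enforce_retention(self, now_ms: int) -> None:
        if self.retention_ms < 0:
            return  # unlimited retention: nothing expires
        cutoff = now_ms - self.retention_ms
        # Simplification: real Kafka removes whole segments, not single records.
        self.records = [r for r in self.records if r[1] >= cutoff]

    def log_start_offset(self) -> int:
        # Earliest offset still readable; offsets of deleted records are never handed out again.
        return self.records[0][0] if self.records else self.next_offset
```

For example, with a seven-day window, a record written at time 0 expires once the clock passes seven days, while a record written at the seven-day mark stays readable at offset 1.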

Apache Spark: Spark itself does not store data; instead, it reads from and writes to external storage systems such as HDFS, Apache HBase, Apache Cassandra, and Amazon S3, either through built-in data sources or through connectors. This flexibility allows organizations to choose the storage infrastructure best suited to their specific needs.

Processing Speed:

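Apache Kafka: Kafka is designed for low-latency, high-throughput data streaming. It excels at delivering events to consumers shortly after they are produced, and its Kafka Streams library adds lightweight stream processing on top, making it ideal for use cases where immediate data availability is crucial.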

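Apache Spark: Spark handles real-time data through Structured Streaming, which supersedes the older DStream-based Spark Streaming API. By default, Structured Streaming processes a stream as a series of small batch jobs (micro-batches), which usually adds some latency compared with handling each event individually. Because Spark also supports classic batch processing with the same DataFrame API, it suits a wide range of use cases, including both real-time and offline data processing.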
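A rough way to see why micro-batching affects latency: an event that arrives just after a batch boundary must wait for the next trigger before it is processed. The Python sketch below compares per-event handling with fixed-interval micro-batches under deliberately simple assumptions (constant processing time, no queueing, batches aligned to multiples of the trigger interval). The functions are hypothetical; in Spark, the interval would be set with something like `trigger(processingTime="1 second")` on a streaming query.

```python
def per_event_latency(arrivals_ms: list[int], processing_ms: int) -> list[int]:
    # Each event is processed the moment it arrives, so latency is just the processing time.
    return [processing_ms for _ in arrivals_ms]


def micro_batch_latency(arrivals_ms: list[int], trigger_ms: int, processing_ms: int) -> list[int]:
    # Events are buffered until the end of their trigger window [k*T, (k+1)*T),
    # then processed together as one batch.
    latencies = []
    for t in arrivals_ms:
        batch_end = (t // trigger_ms + 1) * trigger_ms
        latencies.append(batch_end - t + processing_ms)
    return latencies
```

With a 1,000 ms trigger and 50 ms of processing, events arriving at 0, 250, and 999 ms see latencies of 1,050, 800, and 51 ms, versus a constant 50 ms when each event is handled on arrival. Batching trades this extra delay for per-batch efficiency, which is why Spark's streaming latency is typically higher than Kafka's delivery latency.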

Use Cases:

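Apache Kafka: Common use cases for Kafka include event sourcing, log aggregation, real-time analytics, and building data pipelines. Because many consumers can read the same durable stream, with records kept in order within each partition, Kafka helps keep downstream systems consistent with one another while decoupling them from the systems that produce the data.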
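Event sourcing relies on Kafka keeping an ordered, replayable log: current state is not stored directly but derived by replaying events in order. The sketch below uses a plain Python list as a stand-in for a single topic partition; the event schema and function names are hypothetical. Against a real topic, a new service in a consumer group with no committed offsets could replay from the beginning by setting `auto.offset.reset=earliest`.

```python
from typing import Optional


def apply_event(balances: dict[str, int], event: dict) -> dict[str, int]:
    # Each event records a fact that happened; state is derived from the sequence of facts.
    account, amount = event["account"], event["amount"]
    if event["type"] == "deposited":
        balances[account] = balances.get(account, 0) + amount
    elif event["type"] == "withdrew":
        balances[account] = balances.get(account, 0) - amount
    else:
        raise ValueError(f"unknown event type: {event['type']}")
    return balances


def rebuild_state(event_log: list[dict], up_to_offset: Optional[int] = None) -> dict[str, int]:
    # Replaying from offset 0 reconstructs current state; stopping early yields a past state.
    end = len(event_log) if up_to_offset is None else up_to_offset + 1
    balances: dict[str, int] = {}
    for event in event_log[:end]:
        apply_event(balances, event)
    return balances
```

Because the log, not the derived state, is the source of truth, a bug in a downstream service can be fixed and its state rebuilt by replaying the same events.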

Apache Spark: Spark's use cases span ETL (extract, transform, load), data warehousing, machine learning, graph analytics, and more. Its broad applicability makes it a go-to solution for organizations with diverse data processing requirements.

Conclusion:

Apache Kafka and Apache Spark are complementary technologies that address different aspects of the data processing ecosystem. Kafka specializes in data ingestion and real-time data movement, acting as a robust messaging system. In contrast, Spark offers a powerful data processing engine that can handle various tasks, from batch processing to machine learning.

Organizations often use Kafka and Spark together to build end-to-end data pipelines that cover real-time ingestion, processing, and analysis, typically with Kafka collecting event streams and Spark reading from Kafka topics to process them. Understanding the unique strengths and use cases of each technology is essential for making informed decisions and architecting efficient data solutions.
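When the two are combined, a Spark streaming query typically reads a Kafka topic in offset ranges and records its progress in a checkpoint, so a restarted query resumes where the previous one stopped. The sketch below models that loop in plain Python. `ToyStreamingJob`, the dict used as a checkpoint, and the uppercase transform are hypothetical stand-ins, and the per-batch cap only loosely resembles the Kafka source's `maxOffsetsPerTrigger` option.

```python
from typing import Callable


class ToyStreamingJob:
    """Hypothetical job that reads a Kafka-like log in batches and checkpoints its progress."""

    def __init__(self, log: list[str], checkpoint: dict, max_records_per_batch: int) -> None:
        self.log = log
        self.checkpoint = checkpoint  # stands in for a durable checkpoint location
        self.max_records = max_records_per_batch

    def run_one_batch(self, sink: Callable[[list[str]], None]) -> int:
        # Resume from the last committed offset (0 on the very first run).
        start = self.checkpoint.get("next_offset", 0)
        end = min(start + self.max_records, len(self.log))
        if start == end:
            return 0  # no new data available
        batch = [record.upper() for record in self.log[start:end]]  # the "transform" step
        sink(batch)  # write results first...
        self.checkpoint["next_offset"] = end  # ...then record progress
        return end - start
```

Writing results before advancing the checkpoint means a crash between the two steps causes the batch to be reprocessed rather than lost. That gives at-least-once delivery; end-to-end exactly-once results additionally require an idempotent or transactional sink.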

Dot Labs is an IT outsourcing firm that offers a range of services, including software development, quality assurance, and data analytics. With a team of skilled professionals, Dot Labs offers nearshoring services to companies in North America, providing cost savings while ensuring effective communication and collaboration.

Visit our website: www.dotlabs.ai, for more information on how Dot Labs can help your business with its IT outsourcing needs.
