When processing and manipulating data, your results can only be as good as the data you use: poor-quality data cannot produce good results. Essentially,

Garbage data in, garbage analysis out.

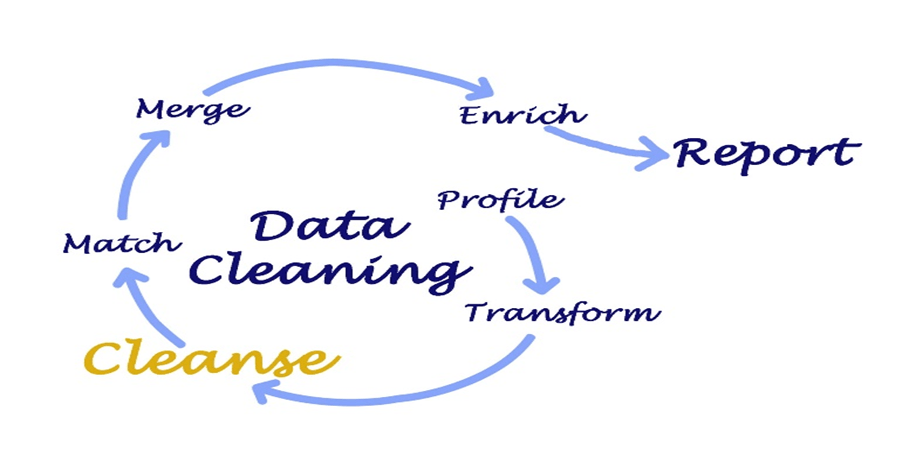

So, to improve the quality of our data, we need to clean it. Data cleaning means removing incorrect, corrupted, incorrectly formatted, duplicate, or incomplete data from a dataset. Data cleaning, also referred to as data cleansing or data scrubbing, is one of the most important steps for any organization that wants to build a culture of decision-making based on quality data.
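To make the definition above concrete, here is a minimal sketch of a basic cleaning pass in plain Python. The records and field names (`id`, `name`, `email`) are hypothetical. The sketch fixes formatting by trimming whitespace and normalizing case. It drops incomplete rows that are missing any required field, and it drops exact duplicates.

```python
# Hypothetical raw records; field names are illustrative only.
RAW = [
    {"id": "1", "name": " Alice ", "email": "alice@example.com"},
    {"id": "1", "name": " Alice ", "email": "alice@example.com"},  # exact duplicate
    {"id": "2", "name": "Bob", "email": ""},                        # incomplete
    {"id": "3", "name": "carol", "email": "CAROL@EXAMPLE.COM"},     # badly formatted
]

REQUIRED = ("id", "name", "email")


def clean(records):
    """Trim and normalize values, then drop incomplete rows and exact duplicates."""
    seen = set()
    cleaned = []
    for rec in records:
        # Fix formatting: strip surrounding whitespace and normalize case.
        rec = {k: v.strip() for k, v in rec.items()}
        rec["name"] = rec["name"].title()
        rec["email"] = rec["email"].lower()
        # Drop incomplete rows: any required field left empty.
        if any(not rec.get(field) for field in REQUIRED):
            continue
        # Drop duplicates: rows identical after normalization.
        key = tuple(rec[field] for field in REQUIRED)
        if key in seen:
            continue
        seen.add(key)
        cleaned.append(rec)
    return cleaned
```

Normalizing before de-duplicating matters. Two rows that differ only in spacing or letter case are then treated as the same record.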

In today's data-driven world, the importance of clean and accurate data cannot be overstated. Poor data quality can lead to costly errors, hinder decision-making, and erode customer trust. To ensure your data is reliable and consistent, Data Quality Management (DQM) techniques and tools play a pivotal role.

The data landscape is booming, but with great data comes great responsibility: the responsibility to ensure its quality. Data Quality Management (DQM) is undergoing a metamorphosis, fueled by advancements in Artificial Intelligence (AI), Machine Learning (ML), and cloud-based solutions.

Here's how these trends are reshaping the DQM sphere:

AI and ML: From Reactive to Proactive Data Cleansing

Gone are the days of manually sifting through data for errors. AI and ML algorithms are now intelligent data detectives, continuously scanning datasets for anomalies and inconsistencies. This proactive approach empowers businesses to identify and rectify issues before they snowball into downstream problems.

Automated Machine Learning (AutoML) Democratizes Data Quality

Building data quality pipelines has traditionally required significant coding expertise. AutoML throws open the doors to a wider audience by automating the creation and deployment of data quality rules. Business analysts and data stewards can now wield the power of DQM without needing to be data scientists.

Cloud-Based DQM: Scalability on Demand

As data volumes balloon, traditional on-premises DQM solutions can struggle to keep pace. Cloud-based tools offer a breath of fresh air: they are inherently scalable, adapting elastically to your data ingestion needs. The cloud also fosters collaboration, allowing geographically dispersed teams to work on data quality initiatives seamlessly.

User-Centric Design: Empowering the Citizen Data Steward

DQM is no longer the sole domain of technical specialists. User-friendly interfaces with drag-and-drop functionalities are empowering citizen data stewards – business users who champion data quality within their domains. This fosters a data-driven culture where everyone is accountable for data integrity.

Let’s explore various techniques and tools used to maintain clean data.

Data Quality Management Techniques:

Data Profiling:

Data profiling is the process of examining data in depth to understand its structure, content, and quality. Specialized profiling tools help identify outliers, irregularities, and inconsistencies, and detect missing values within datasets. This technique is essential for assessing the overall health and reliability of your data.
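To show what a profiling tool computes, here is a minimal single-column profiler that uses only Python's standard library. It reports the number of values, how many are missing, and how many are distinct. For numeric values it also reports the min, max, and outliers. Outliers are flagged with the common 1.5 × IQR rule (Tukey's fences). That threshold is an assumption made for this sketch, not a feature of any particular profiling tool.

```python
import statistics


def profile_column(values):
    """Summarize one column: size, missing values, distinct values and,
    for numeric data, range plus IQR-based outliers."""
    # Treat None and empty strings as missing.
    present = [v for v in values if v is not None and v != ""]
    summary = {
        "count": len(values),
        "missing": len(values) - len(present),
        "distinct": len(set(present)),
    }
    numbers = [v for v in present if isinstance(v, (int, float))]
    if len(numbers) >= 4:
        # Quartiles, then Tukey's fences at 1.5 * IQR beyond Q1 and Q3.
        q1, _, q3 = statistics.quantiles(numbers, n=4)
        iqr = q3 - q1
        low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
        summary["min"] = min(numbers)
        summary["max"] = max(numbers)
        summary["outliers"] = [v for v in numbers if v < low or v > high]
    return summary


# Usage with a hypothetical "age" column:
print(profile_column([34, 29, None, 41, 38, 250, 31, ""]))
```

On this sample the profiler reports two missing values and flags 250 as an outlier. That is exactly the kind of irregularity worth investigating before any analysis.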

Data Standardization:

Data standardization is a data processing workflow that establishes uniform formats, naming conventions, and coding schemes for data elements, converting datasets with different structures into one common format. It transforms the data after it is collected from different sources and before it is loaded into target systems. This ensures consistency and facilitates data integration across different systems and sources.
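The sketch below shows those three aspects of standardization on hypothetical records coming from two source systems:

* **Naming conventions:** source field names are mapped to one convention.
* **Formats:** dates arriving in different formats are converted to ISO 8601.
* **Coding schemes:** free-text country names are mapped to two-letter codes.

The field names, the accepted date formats, and the country mapping are all illustrative assumptions. The `%d/%m/%Y` format also assumes day-first dates, so an ambiguous value such as `01/02/2024` is read as 1 February.

```python
from datetime import datetime

# Hypothetical mapping from source-system field names to one naming convention.
FIELD_NAMES = {
    "SignupDate": "signup_date", "signup_dt": "signup_date",
    "Country": "country", "country_name": "country",
}

# Assumed date formats seen across sources (day-first for slashed dates).
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%b %d, %Y")

# Hypothetical coding scheme: free-text country names -> two-letter codes.
COUNTRY_CODES = {"united states": "US", "usa": "US", "canada": "CA"}


def standardize_date(text):
    """Convert a date in any known source format to ISO 8601 (YYYY-MM-DD)."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text.strip(), fmt).date().isoformat()
        except ValueError:
            continue  # try the next known format
    raise ValueError(f"unrecognized date format: {text!r}")


def standardize_record(rec):
    """Rename fields to the common convention, then normalize their values."""
    out = {FIELD_NAMES.get(k, k): v for k, v in rec.items()}
    out["signup_date"] = standardize_date(out["signup_date"])
    out["country"] = COUNTRY_CODES[out["country"].strip().lower()]
    return out
```

After standardization, records from both sources share the same shape. For example, `{"SignupDate": "14/03/2024", "Country": "USA"}` and `{"signup_dt": "Mar 14, 2024", "country_name": "United States"}` both become `{"signup_date": "2024-03-14", "country": "US"}`.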

Data Validation:

Data validation checks data for accuracy and conformity to predefined rules before it is used to train your machine learning models. Validation techniques include format validation, range checks, and referential integrity checks to ensure data meets specific criteria. Data validation is essential because, if your data is bad, your results will be, too.
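Here is a minimal sketch of the three validation techniques named above, applied to a hypothetical order record. The field names and the allowed quantity range (1 to 1000) are assumptions for illustration. The email pattern is deliberately simple and will not match every rule for valid addresses.

```python
import re

# Deliberately simple email pattern used for format validation.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[A-Za-z]{2,}$")


def validate(order, known_customer_ids):
    """Return a list of rule violations for one order (empty list = valid)."""
    errors = []
    # Format validation: the email must look like an address.
    if not EMAIL_RE.match(order.get("email", "")):
        errors.append("email: invalid format")
    # Range check: quantity must be an integer within business limits.
    qty = order.get("quantity")
    if not isinstance(qty, int) or not 1 <= qty <= 1000:
        errors.append("quantity: must be an integer between 1 and 1000")
    # Referential integrity: the order must point at an existing customer.
    if order.get("customer_id") not in known_customer_ids:
        errors.append("customer_id: no matching customer")
    return errors
```

The function returns a list of violations rather than failing on the first one. That way a single pass reports everything wrong with a record, which is helpful when deciding whether to fix, quarantine, or reject it.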

Data Enrichment:

Data enrichment refers to the process of augmenting your raw or existing data with additional information to make it more useful. You can do this in several ways, including geocoding, adding demographic data, or linking records to external data sources to provide more context and value. One of the most basic and common approaches is combining data from different sources.
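The simplest form of enrichment mentioned above is combining data from different sources. Here is a minimal sketch that joins customer records to an external reference table keyed by postal code. The lookup table stands in for a real geocoding or demographic source and is hypothetical. Existing customer values are never overwritten.

```python
# Hypothetical external reference data: postal code -> location attributes.
POSTAL_LOOKUP = {
    "10001": {"city": "New York", "state": "NY"},
    "94105": {"city": "San Francisco", "state": "CA"},
}


def enrich(customers, lookup):
    """Add attributes from the lookup table to each customer record."""
    enriched = []
    for cust in customers:
        extra = lookup.get(cust.get("postal_code"), {})
        # Customer fields come last, so they win over lookup fields on conflict.
        enriched.append({**extra, **cust})
    return enriched
```

Records with no match in the reference data pass through unchanged. Counting those unmatched records is a useful data quality signal in its own right.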

Master Data Management (MDM):

Master data management (MDM) is a technology-enabled discipline in which business and IT work together to ensure the uniformity, accuracy, semantic consistency, and accountability of the enterprise's official shared master data assets (such as customers, products, and employees) in a centralized repository. Your organization may require fundamental changes in its business processes to maintain clean master data.
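A core MDM task is consolidating duplicate records from several systems into a single "golden record". Here is a minimal sketch of that step. Records are matched on a normalized email address, and a simple survivorship rule keeps, for each field, the most recently updated non-empty value. Both the match key and the survivorship rule are assumptions for illustration. MDM platforms typically support configurable, often fuzzy, matching and richer rules.

```python
from collections import defaultdict


def build_golden_records(records):
    """Merge source records that share a normalized email into one master
    record per customer, letting newer non-empty values win."""
    # Match step: group records by a normalized match key.
    groups = defaultdict(list)
    for rec in records:
        groups[rec["email"].strip().lower()].append(rec)

    golden = {}
    for key, recs in groups.items():
        master = {}
        # Survivorship: process oldest first so newer non-empty values overwrite.
        # "updated" is assumed to be an ISO date string, so text order = date order.
        for rec in sorted(recs, key=lambda r: r["updated"]):
            for field, value in rec.items():
                if value not in (None, ""):
                    master[field] = value
        master["email"] = key  # store the normalized key, not a raw variant
        golden[key] = master
    return golden
```

For example, a CRM record `{"email": "Ann@Example.com", "name": "Ann Lee", "phone": ""}` might be followed by a newer billing record `{"email": "ann@example.com ", "name": "Ann M. Lee", "phone": "555-0100"}`. These collapse into one master record with the newer name and the only available phone number.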

Data Quality Scorecards:

Scorecards provide a visual representation of data quality metrics and key performance indicators (KPIs). They summarize and communicate data quality indicators and data cleansing metrics concisely and visually. This helps organizations monitor data quality over time and identify the areas of their cleansing initiatives that need improvement.
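Behind a scorecard's visuals are a few simple metrics computed per column. The sketch below computes three common ones as percentages:

* **Completeness:** the share of rows with a value.
* **Validity:** the share of present values that pass a rule.
* **Uniqueness:** the share of present values that are distinct.

The metric definitions and the example rules are assumptions for illustration. Organizations define their own KPIs.

```python
def scorecard(rows, rules):
    """Compute completeness, validity, and uniqueness percentages per column.

    rules maps a column name to a predicate that returns True for valid values.
    """
    total = len(rows)
    card = {}
    for column, is_valid in rules.items():
        values = [r.get(column) for r in rows]
        # Missing means None or empty string; everything else counts as present.
        present = [v for v in values if v is not None and v != ""]
        n = len(present)
        card[column] = {
            "completeness": round(100 * n / total, 1) if total else 0.0,
            "validity": round(100 * sum(1 for v in present if is_valid(v)) / n, 1) if n else 0.0,
            "uniqueness": round(100 * len(set(present)) / n, 1) if n else 0.0,
        }
    return card


# Usage with hypothetical data and rules:
rows = [
    {"email": "a@example.com", "age": 34},
    {"email": "a@example.com", "age": -5},
    {"email": "", "age": 51},
    {"email": "d@example", "age": 28},
]
rules = {
    "email": lambda v: "@" in v and "." in v.split("@")[-1],
    "age": lambda v: 0 <= v <= 120,
}
print(scorecard(rows, rules))
```

Recomputing these numbers on a schedule and plotting them gives the "data quality over time" view described above.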

Data Governance:

Data governance is a comprehensive strategy for managing data quality. The process involves managing the availability, usability, integrity, and security of the data in enterprise systems, based on internal data standards and policies that also control data usage. It establishes the policies, procedures, and roles for ensuring data quality and compliance with regulations. Effective data governance ensures that data is consistent and trustworthy and doesn't get misused.

Data Quality Management Tools

Many data cleaning tools on the market are effective at improving data quality and, in turn, the results built on that data. Some of the most effective tools for data cleaning are OpenRefine, Trifacta, Informatica Data Quality, Talend Data Quality, SAS Data Management, Microsoft Data Quality Services, Melissa Data Quality Suite, and many more.

OpenRefine:

OpenRefine, previously known as Google Refine, is a powerful, open-source data cleaning and transformation tool that lets you visualize and manipulate large quantities of data at once. It allows users to explore, clean, and transform data easily, making it a valuable asset for data quality improvement. It looks like a spreadsheet but operates like a database, offering discovery capabilities beyond those of programs like Microsoft Excel.

Trifacta:

Trifacta offers a user-friendly, visual interface for data wrangling and cleaning. It helps data analysts and business users clean and prepare data without extensive technical skills. Dataprep by Trifacta is an intelligent data service for visually exploring, cleaning, and preparing structured and unstructured data for analysis, reporting, and machine learning.

Informatica Data Quality:

Informatica provides a suite of data quality tools, including data profiling, data cleansing, and data enrichment, and it is widely used in enterprises for managing data quality. More broadly, Informatica offers data integration products for ETL, data masking, data quality, data replication, data virtualization, master data management, and more. Its ETL tooling is among the most widely used data integration tools for connecting to and fetching data from different data sources.

Talend Data Quality:

Talend offers data quality capabilities within its data integration platform. It enables users to profile, cleanse, and enrich data as part of their data integration workflows.

SAS Data Management:

SAS Data Management provides a comprehensive suite of tools for data quality, data governance, and data integration. It's a powerful solution for organizations with complex data quality requirements.

Microsoft Data Quality Services (DQS):

DQS is part of the SQL Server suite and provides data quality features. It allows users to build knowledge bases and perform data cleansing and validation.

Melissa Data Quality Suite:

Melissa Data offers a range of data quality tools for address validation, email verification, and identity verification. It's particularly useful for organizations dealing with customer data.

Conclusion:

Data Quality Management is a critical component of any organization's data strategy. By implementing the right techniques and tools, businesses can ensure their data is accurate, consistent, and trustworthy. Clean data not only supports better decision-making but also enhances customer satisfaction and compliance with data-related regulations. Whether you choose open-source solutions or commercial software, investing in data quality management is an investment in the success of your data-driven initiatives.

While AI and ML are game-changers, they shouldn't replace human expertise. Their true value lies in augmenting human capabilities. Data analysts and data quality specialists will continue to play a crucial role in defining data quality standards, overseeing AI/ML models, and handling complex data issues.

By embracing these trends, organizations can unlock the true potential of their data. With robust DQM practices in place, businesses can make data-driven decisions with confidence, leading to a significant competitive edge. So, stay ahead of the curve and elevate your DQM strategy in 2024!

Dot Labs is an IT outsourcing firm that offers a range of services, including software development, quality assurance, and data analytics. With a team of skilled professionals, Dot Labs offers nearshoring services to companies in North America, providing cost savings while ensuring effective communication and collaboration. Visit our website, www.dotlabs.ai, for more information on how Dot Labs can help your business with its IT outsourcing needs. For more informative blogs on the latest technologies and trends, click here.