A data warehouse is a type of data management system that is designed to enable and support business intelligence (BI) activities, especially analytics. Data warehouses frequently hold vast amounts of historical data and are used only for queries and analysis. Application log files and transaction apps are just two examples of the many different sources from which the data in a data warehouse is typically gathered.

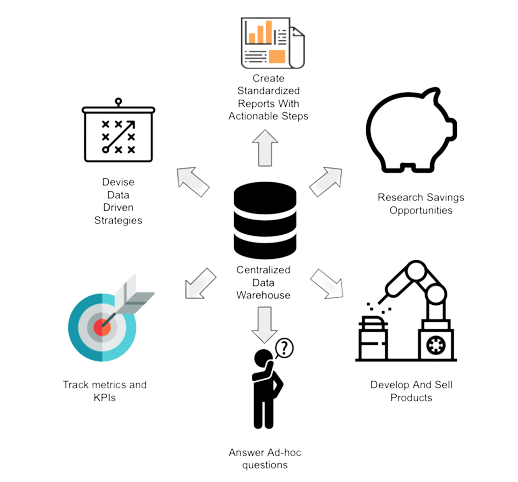

A data warehouse is used to centrally store and consolidate large amounts of data from multiple sources. Its analytical capabilities allow organizations to derive valuable business insights from their data to improve decision-making. Over time, it builds a historical record that can be invaluable to data scientists and business analysts. Because of these capabilities, a data warehouse can be considered an organization’s “single source of truth.”

In today's data-driven world, the volume and complexity of data generated by businesses are growing exponentially. As organizations strive to harness the power of big data for insights and decision-making, data warehousing has become a critical component of their data strategies. However, managing big data in a data warehousing environment comes with its own set of challenges and requires innovative solutions. In this article, we'll explore the challenges and offer solutions for data warehousing in the era of big data.

Benefits of a Data Warehouse

Data warehouses offer the predominant and unique advantage of allowing organizations to analyze large amounts of varied data and extract significant value from it, while keeping the historical record intact.

Four unique characteristics, as described by computer scientist William Inmon, allow data warehouses to deliver this overarching benefit. According to this definition, data warehouses are:

o Subject-oriented: Data warehouses can analyze data about a particular subject or functional area, such as a company's sales.

o Integrated: Data warehouses create consistency among different data types from disparate sources.

o Nonvolatile: Once data is in a data warehouse, it is stable and does not change. When new data is added, the previous data is not replaced, omitted, or discarded.

o Time-variant: Data warehouse analysis looks at change over time.
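The nonvolatile and time-variant properties can be illustrated with a small sketch. It uses Python's built-in `sqlite3` module as a stand-in for a warehouse, and the `price_history` table, its columns, and the helper functions are hypothetical names chosen for illustration only.

```python
import sqlite3

# In-memory SQLite database standing in for a warehouse (assumption for illustration).
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE price_history (product TEXT, price REAL, valid_from TEXT)"
)

def record_price(product, price, valid_from):
    """Nonvolatile: append a new version of the fact; old rows are never changed."""
    conn.execute(
        "INSERT INTO price_history VALUES (?, ?, ?)",
        (product, price, valid_from),
    )

def price_as_of(product, date):
    """Time-variant: return the price that was valid on the given ISO date."""
    row = conn.execute(
        "SELECT price FROM price_history"
        " WHERE product = ? AND valid_from <= ?"
        " ORDER BY valid_from DESC LIMIT 1",
        (product, date),
    ).fetchone()
    return row[0] if row else None

record_price("widget", 9.99, "2023-01-01")
record_price("widget", 10.49, "2023-06-01")  # added alongside the earlier row

# Both versions remain available for historical analysis.
history = conn.execute(
    "SELECT valid_from, price FROM price_history ORDER BY valid_from"
).fetchall()
```

Because rows are only ever appended, a query such as `price_as_of("widget", "2023-03-15")` can still answer questions about the past after newer data has arrived.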

A well-designed data warehouse will respond rapidly to queries, enable high data throughput, and provide enough flexibility for end users to “slice and dice” the data, that is, reduce its volume for closer examination, to meet a variety of demands, whether at a high level or at a very fine, detailed level. The data warehouse serves as the functional foundation for middleware BI environments that provide end users with reports, dashboards, and other interfaces.
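As a rough illustration of what “slice and dice” means in practice, the sketch below works on a tiny in-memory list of sales facts. The data, the dimension names (region, product, year), and the function names are all made up for the example; a real warehouse would run equivalent queries in SQL or a BI tool.

```python
from collections import defaultdict

# Hypothetical sales facts: (region, product, year, revenue).
sales = [
    ("North", "laptop", 2022, 1200.0),
    ("North", "phone", 2022, 800.0),
    ("South", "laptop", 2022, 950.0),
    ("North", "laptop", 2023, 1300.0),
    ("South", "phone", 2023, 700.0),
]

def slice_by_year(rows, year):
    """Slice: fix one dimension (year) to a single value."""
    return [r for r in rows if r[2] == year]

def dice(rows, regions, products):
    """Dice: keep a smaller sub-cube by restricting several dimensions at once."""
    return [r for r in rows if r[0] in regions and r[1] in products]

def rollup_by_region(rows):
    """Aggregate revenue to a higher level of detail (one total per region)."""
    totals = defaultdict(float)
    for region, _product, _year, revenue in rows:
        totals[region] += revenue
    return dict(totals)

sales_2022 = slice_by_year(sales, 2022)
north_laptops = dice(sales, {"North"}, {"laptop"})
totals_2022 = rollup_by_region(sales_2022)
```

The same facts serve both the high-level view (`totals_2022`) and the detailed one (`north_laptops`), which is the flexibility described above.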

Data warehousing is incorporated in almost all the fields where record keeping is essential for data-driven decision-making, such as:

o Financial services

o Banking services

o Consumer goods

o Retail sectors

o Controlled manufacturing

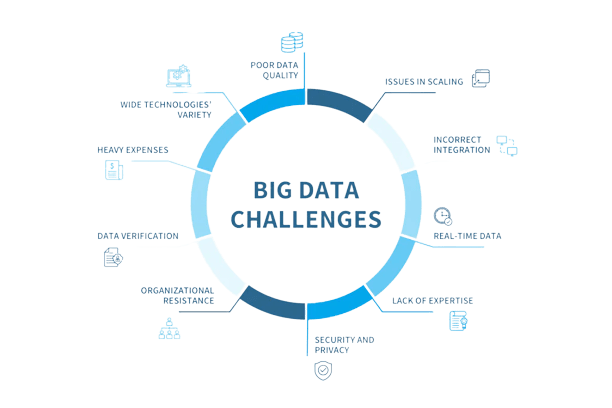

Challenges faced with Big Data Warehousing:

Scalability: Big data can overwhelm traditional data warehousing solutions. A warehouse may not have been designed to handle the volume of data being loaded into it, and scaling to accommodate massive data volumes without compromising performance is a significant challenge. Data engineers should consider cloud-based data warehousing solutions that offer elastic scalability, so that the system can adapt to changing data requirements and handle varying volumes of data.

Data Variety: Big data comes in various formats, from structured to unstructured. Integrating and querying diverse data types can be complex. To cope with this issue, engineers should use data transformation tools and schema-on-read approaches to handle and analyze diverse data sources.
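To make the schema-on-read idea more concrete, here is a minimal sketch. Raw records are kept exactly as they arrived, and a schema is applied only when the data is read. The field names, the `SCHEMA` mapping, and the `read_with_schema` helper are assumptions for illustration, not part of any particular tool.

```python
import json

# Raw records are stored as-is; no schema was enforced when they were written.
raw_lines = [
    '{"user": "a1", "amount": "19.90", "ts": "2023-05-01"}',
    '{"user": "b2", "amount": 5, "channel": "web"}',
    "not valid json",
]

# Hypothetical schema applied only at read time: field -> (type, default).
SCHEMA = {
    "user": (str, None),
    "amount": (float, 0.0),
    "channel": (str, "unknown"),
}

def read_with_schema(lines, schema):
    """Parse raw lines and coerce each record to the schema; skip unreadable lines."""
    for line in lines:
        try:
            record = json.loads(line)
        except json.JSONDecodeError:
            continue  # a real pipeline might route these to a quarantine area
        if not isinstance(record, dict):
            continue
        yield {
            field: cast(record[field]) if field in record else default
            for field, (cast, default) in schema.items()
        }

rows = list(read_with_schema(raw_lines, SCHEMA))
```

Fields that are not in the schema (such as `ts`) are ignored by this reader but remain in the raw data, so a different schema can be applied later without reloading anything. Values that cannot be cast would raise an error in this sketch; production code would need to handle that case.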

Data Ingestion: Efficiently ingesting large volumes of data from numerous sources while ensuring data quality and consistency can be a daunting task. Manually managing, ingesting, and manipulating data is time-consuming and can be costly. To cope with this problem, organizations should employ data integration tools and automated data cleansing processes to streamline data ingestion.
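An automated cleansing step might look something like the sketch below. The record layout (`email`, `name`) and the rules (trim, lowercase, reject rows without an `@`, drop duplicates) are assumptions chosen for the example; real pipelines usually apply many more rules through a dedicated integration tool.

```python
def clean_record(record):
    """Return a normalized copy of the record, or None if it is unusable."""
    email = (record.get("email") or "").strip().lower()
    if "@" not in email:
        return None  # reject rows without a plausible email address
    # Collapse repeated whitespace and normalize capitalization of the name.
    name = " ".join((record.get("name") or "").split()).title()
    return {"email": email, "name": name}

def cleanse(records):
    """Normalize records and drop duplicates, keeping the first occurrence."""
    seen = set()
    cleaned = []
    for record in records:
        row = clean_record(record)
        if row is None or row["email"] in seen:
            continue
        seen.add(row["email"])
        cleaned.append(row)
    return cleaned

incoming = [
    {"email": " Ana@Example.com ", "name": "ana  lopez"},
    {"email": "ana@example.com", "name": "Ana Lopez"},  # duplicate after cleaning
    {"email": "missing-at-sign", "name": "Bad Row"},
    {"email": None, "name": "No Email"},
]
result = cleanse(incoming)
```

Running the same rules on every load is what removes the manual effort: the four incoming rows above collapse to a single clean record.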

Data Processing: Processing big data for analytics and reporting requires robust processing capabilities to deliver timely results. It can be difficult to maintain data quality in a traditional data warehouse structure. Manual errors and missed updates can lead to corrupt or obsolete data, which undermines data-driven decision-making and produces imprecise analysis for users pulling data from the warehouse.

Automating data processing can reduce the chance of human error and inaccuracies in data entry. Parallel processing and distributed computing frameworks should be leveraged to handle data processing at scale.
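The basic pattern behind parallel processing is to split the data into partitions, process each partition independently, and merge the partial results. The sketch below shows that pattern on a single machine using Python's standard `concurrent.futures` module; the event data and function names are hypothetical. Threads are used only to keep the example simple. In standard CPython they will not speed up CPU-bound work, and a distributed framework would spread the partitions across many machines instead.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor

def count_events(partition):
    """Map step: count event types within one partition of the data."""
    return Counter(partition)

def parallel_count(partitions, workers=4):
    """Process partitions concurrently, then merge the partial counts."""
    total = Counter()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        for partial in pool.map(count_events, partitions):
            total.update(partial)  # reduce step: combine partial results
    return total

# Hypothetical event log, already split into three partitions.
partitions = [
    ["click", "view", "click"],
    ["view", "purchase"],
    ["click"],
]
totals = parallel_count(partitions)
```

Because each partition is counted without reference to the others, the work can be scaled out simply by adding partitions and workers.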

Data Security: Protecting sensitive data is crucial, and maintaining security in a big data warehouse can be challenging. Implementing robust encryption, access controls, and data governance policies helps safeguard the data in big data warehouses.
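As one small illustration of access controls, the sketch below applies a column-level policy: each role sees only the columns it is allowed to read, and everything else is masked. The roles, column names, and `ROLE_COLUMNS` policy are hypothetical. Note that the masking here is a salted hash (pseudonymization), not encryption; actual encryption at rest and in transit would normally be provided by the warehouse platform or a vetted cryptography library.

```python
import hashlib

# Hypothetical policy: which columns each role may read in clear text.
ROLE_COLUMNS = {
    "analyst": {"order_id", "amount"},
    "auditor": {"order_id", "amount", "customer_email"},
}

def mask(value, salt="demo-salt"):
    """Replace a sensitive value with a short salted SHA-256 digest."""
    digest = hashlib.sha256((salt + str(value)).encode("utf-8")).hexdigest()
    return digest[:12]

def read_row(row, role):
    """Return the row as seen by a role: permitted columns in clear, others masked."""
    allowed = ROLE_COLUMNS.get(role)
    if allowed is None:
        raise PermissionError(f"unknown role: {role}")
    return {col: (val if col in allowed else mask(val)) for col, val in row.items()}

row = {"order_id": 42, "amount": 99.5, "customer_email": "ana@example.com"}
analyst_view = read_row(row, "analyst")  # email is masked
auditor_view = read_row(row, "auditor")  # email is visible
```

Unknown roles are rejected outright rather than given a default view, which keeps the policy closed by default.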

Data Governance: Maintaining data quality and compliance and ensuring data lineage are complicated in big data environments. Siloed data governance efforts are bound to struggle: they have little impact if they are not designed with people, processes, and technology in mind. Data governance frameworks must be holistic, based on cross-functional collaboration, shared terminology, and a common set of standards and metrics. Organizations should establish comprehensive data governance frameworks and metadata management to track data lineage and enforce data standards.

Solutions:

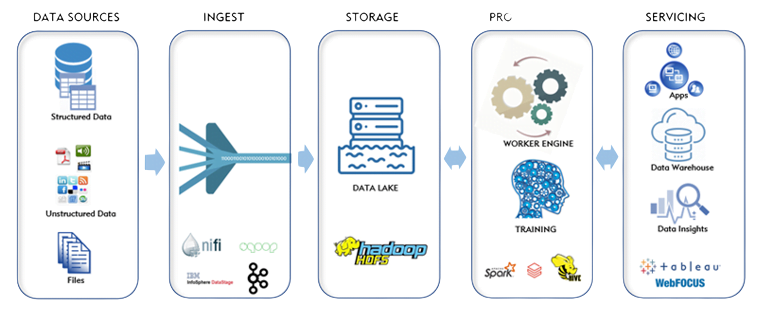

Data Lake Integration: Integrating data lakes with data warehousing solutions makes it possible to accommodate diverse data types. This approach allows for the storage of raw data and on-the-fly processing, enabling greater flexibility in data handling.

Parallel Processing: Parallel processing frameworks such as Apache Hadoop and Apache Spark can distribute data processing tasks, which can significantly improve the system’s performance.

Cloud Data Warehousing: Cloud-based data warehousing platforms like Amazon Redshift, Google BigQuery, and Snowflake offer scalable and cost-effective solutions, enabling businesses to adapt to changing data needs.

Data Governance Tools: Implement data governance tools that help maintain data quality, ensure compliance, and monitor data lineage, making it easier to manage big data in a controlled environment.

Advanced Analytics: Leverage advanced analytics and machine learning to extract valuable insights from big data within your data warehousing platform, enhancing decision-making capabilities.

Data Compression and Storage Optimization: Use data compression techniques and storage optimization strategies to reduce storage costs and enhance performance.
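To get a feel for how much compression can save, the sketch below compresses a small, made-up table with Python's standard `zlib` module and compares a row-oriented layout with a column-oriented one. The table contents and variable names are assumptions for illustration; warehouse platforms typically use their own columnar formats and codecs rather than JSON and zlib.

```python
import json
import zlib

# Hypothetical table with repetitive, low-cardinality values.
rows = [{"country": "US" if i % 2 else "CA", "amount": i % 10} for i in range(1000)]

# Row-oriented layout: one JSON object per record.
row_bytes = json.dumps(rows).encode("utf-8")

# Column-oriented layout: values of the same column stored together.
columns = {
    "country": [r["country"] for r in rows],
    "amount": [r["amount"] for r in rows],
}
col_bytes = json.dumps(columns).encode("utf-8")

# Compress both layouts at the highest zlib compression level.
row_compressed = zlib.compress(row_bytes, 9)
col_compressed = zlib.compress(col_bytes, 9)

def ratio(raw, compressed):
    """Compression ratio: raw size divided by compressed size."""
    return len(raw) / len(compressed)

# Compression is lossless: decompressing restores the original data exactly.
restored = json.loads(zlib.decompress(col_compressed))
```

Printing `ratio(row_bytes, row_compressed)` and `ratio(col_bytes, col_compressed)` on your own data is a quick way to estimate how much a given layout and codec might save before committing to one.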

Real-time Data Integration: Implement real-time data integration solutions to keep data up to date and relevant for analytical purposes. By analyzing event logs within milliseconds of their creation, real-time big data analytics helps organizations mitigate attacks as they occur. With real-time data integration, insights about your company become available on business intelligence (BI) platforms immediately.

Summary:

Data warehousing for big data is a complex but necessary endeavor for organizations looking to unlock the potential of their data. By addressing the challenges with innovative solutions and embracing modern data warehousing practices, businesses can gain a competitive edge by harnessing the power of big data for strategic decision-making, analytics, and business intelligence. In this data-driven age, the ability to navigate the complexities of big data is paramount for success.

Dot Labs is an IT outsourcing firm that offers a range of services, including software development, quality assurance, and data analytics. With a team of skilled professionals, Dot Labs offers nearshoring services to companies in North America, providing cost savings while ensuring effective communication and collaboration. Visit our website, www.dotlabs.ai, for more information on how Dot Labs can help your business with its IT outsourcing needs.